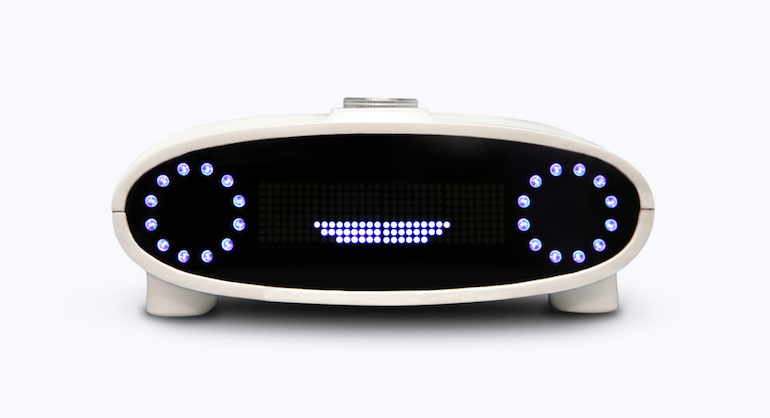

Meet Mycroft, the open source AI who wants to rival Siri, Cortana, and Alexa

“It was inspired by the Star Trek computers, by Jarvis in Iron Man,” Montgomery told ZDNet. He wanted to create the type of artificial intelligence platform that “if you spoke to it when you walked in the room, it could control the music, control the lights, the doors” and more.

After investigating the state of open-source voice control systems on which he could build the platform, Montgomery found there was work to be done. “It turned out there was a complete void. Open source software doesn’t have an equivalent to Siri, Alexa, or Cortana,” Montgomery said.

Last year, after building the AI system and setting up a company to commercialise it, the decision was taken to seek crowdfunding. But, as “software projects seldom get funded on Kickstarter,” said Montgomery, the company decided to build a reference device to go with its software platform: a hub device based on Raspberry Pi 3 and Arduino.

The company’s Kickstarter campaign succeeded: it raised over $127,000, and a subsequent Indiegogo campaign generated another $160,000. The reference devices began shipping to the first buyers at the start of April, and all backers should get their devices by July.

The system runs on Snappy Ubuntu Core and is now composed of four software parts: the Adapt Intent Parser, which converts users’ natural language commands into data the system can act on; the Mimic Text to Speech engine; Open Speech to Text; and the Mycroft Core, which integrates the other parts.
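To make the division of labour between the four parts concrete, here is a minimal Python sketch of a speech-to-text, intent-parsing, and text-to-speech pipeline. It is a toy illustration only and does not use the real Adapt, Mimic, or Mycroft Core APIs: the `RULES` table, the `Intent` class, and the functions `speech_to_text`, `parse_intent`, `text_to_speech`, and `handle` are all hypothetical names, and the speech stages are stubs that pass text through.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """Structured result of parsing one spoken command (hypothetical type)."""
    name: str
    slots: dict = field(default_factory=dict)


# Hypothetical rule table: intent name -> regex whose named groups become slots.
RULES = {
    "play_music": re.compile(r"\bplay (?P<track>.+)"),
    "set_light": re.compile(
        r"\bturn (?P<state>on|off) the (?P<room>\w+) lights?\b"
    ),
}


def speech_to_text(audio: bytes) -> str:
    """Stub for a speech-to-text engine: treats the 'audio' as UTF-8 text."""
    return audio.decode("utf-8")


def parse_intent(utterance: str):
    """Turn a natural language command into data the system can act on."""
    text = utterance.lower().strip()
    for name, pattern in RULES.items():
        match = pattern.search(text)
        if match:
            return Intent(name, match.groupdict())
    return None  # no rule matched


def text_to_speech(reply: str) -> str:
    """Stub for a text-to-speech engine: tags the reply instead of speaking."""
    return f"[spoken] {reply}"


def handle(audio: bytes) -> str:
    """Stand-in for the integrating core: wires the three stages together."""
    intent = parse_intent(speech_to_text(audio))
    if intent is None:
        return text_to_speech("Sorry, I did not understand that.")
    if intent.name == "set_light":
        reply = (
            f"Turning {intent.slots['state']} "
            f"the {intent.slots['room']} lights."
        )
    else:
        reply = f"Playing {intent.slots['track']}."
    return text_to_speech(reply)
```

Calling `handle(b"Turn on the kitchen lights")` runs the command through all three stages. A real intent parser would do far more than regex matching; the point of the sketch is only that the parser's output is structured data (an intent name plus slots) rather than free text.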

Mycroft has dozens of developers involved (the Mycroft Core is available on GitHub) and hopes to raise that number to a thousand before too long.

Source: Meet Mycroft – ZDNet